Abstract

TL;DR: We propose a perception-aware, minimum-time, vision-based navigation method for flying a quadrotor through cluttered environments. The method combines reinforcement learning and imitation learning by leveraging a learning-by-cheating framework.

Recently, neural control policies have outperformed existing model-based planning-and-control methods for autonomously navigating quadrotors through cluttered environments in minimum time. However, they are not perception-aware, a crucial requirement in vision-based navigation due to the camera's limited field of view and the underactuated nature of a quadrotor. We propose a method to learn neural network policies that achieve perception-aware, minimum-time flight in cluttered environments. Our method combines imitation learning and reinforcement learning (RL) by leveraging a privileged learning-by-cheating framework. Using RL, we first train a perception-aware teacher policy with full-state information to fly in minimum time through cluttered environments. Then, we use imitation learning to distill its knowledge into a vision-based student policy that only perceives the environment via a camera. Our approach tightly couples perception and control, showing a significant advantage in computation speed (10x faster) and success rate. We demonstrate the closed-loop control performance using a physical quadrotor and hardware-in-the-loop simulation at speeds up to 50 km/h.

Video

Overview

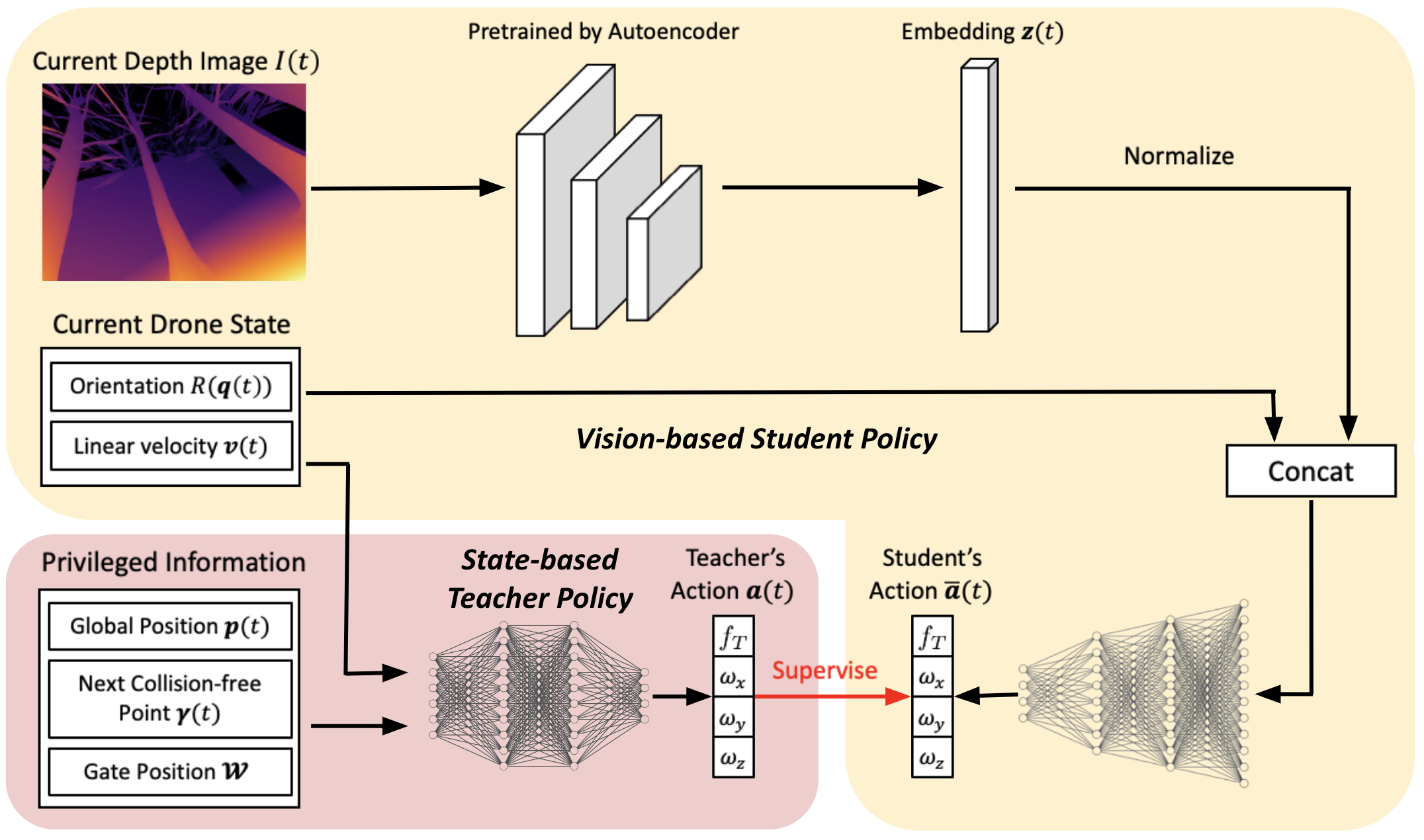

Training: We first train a state-based teacher policy using model-free deep reinforcement learning, in which the policy has access to privileged information about the vehicle’s state and its surrounding environment. This teacher policy is then distilled into a vision-based student policy that does not rely on privileged information. All training is carried out in the Flightmare simulator.
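The teacher-to-student distillation step can be illustrated with a toy sketch. This is not the training code used in this work: the linear teacher, the `observe` sensor encoding, the linear student, and all gains and hyperparameters below are hypothetical stand-ins chosen so that the example runs with the Python standard library only. It shows only the general pattern, in which a teacher acts on the privileged state while the student is regressed onto the teacher's actions from its own restricted observation.

```python
import random


def teacher_policy(state):
    """Hypothetical privileged teacher: acts on the full state (position error, velocity)."""
    pos_err, vel = state
    return -1.5 * pos_err - 0.5 * vel


def observe(state):
    """Hypothetical sensor model: the student sees an encoded observation, not the raw state."""
    pos_err, vel = state
    return (pos_err + vel, pos_err - vel)


def student_policy(weights, obs):
    """Linear student acting on the observation only."""
    return weights[0] * obs[0] + weights[1] * obs[1]


def distill(num_steps=5000, lr=0.05, seed=0):
    """Fit the student to the teacher's actions with SGD on a squared imitation loss."""
    rng = random.Random(seed)
    weights = [0.0, 0.0]
    for _ in range(num_steps):
        # Sample a state; only the teacher is allowed to look at it directly.
        state = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        obs = observe(state)
        target = teacher_policy(state)
        error = student_policy(weights, obs) - target
        # Gradient step on 0.5 * error**2 with respect to the student weights.
        weights[0] -= lr * error * obs[0]
        weights[1] -= lr * error * obs[1]
    return weights


weights = distill()
test_state = (0.3, -0.2)
print("teacher action:", teacher_policy(test_state))
print("student action:", student_policy(weights, observe(test_state)))
```

In this toy setting the observation still determines the state, so the student can match the teacher exactly. With a real camera the observation is partial, and the student can only approximate the teacher's behavior.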

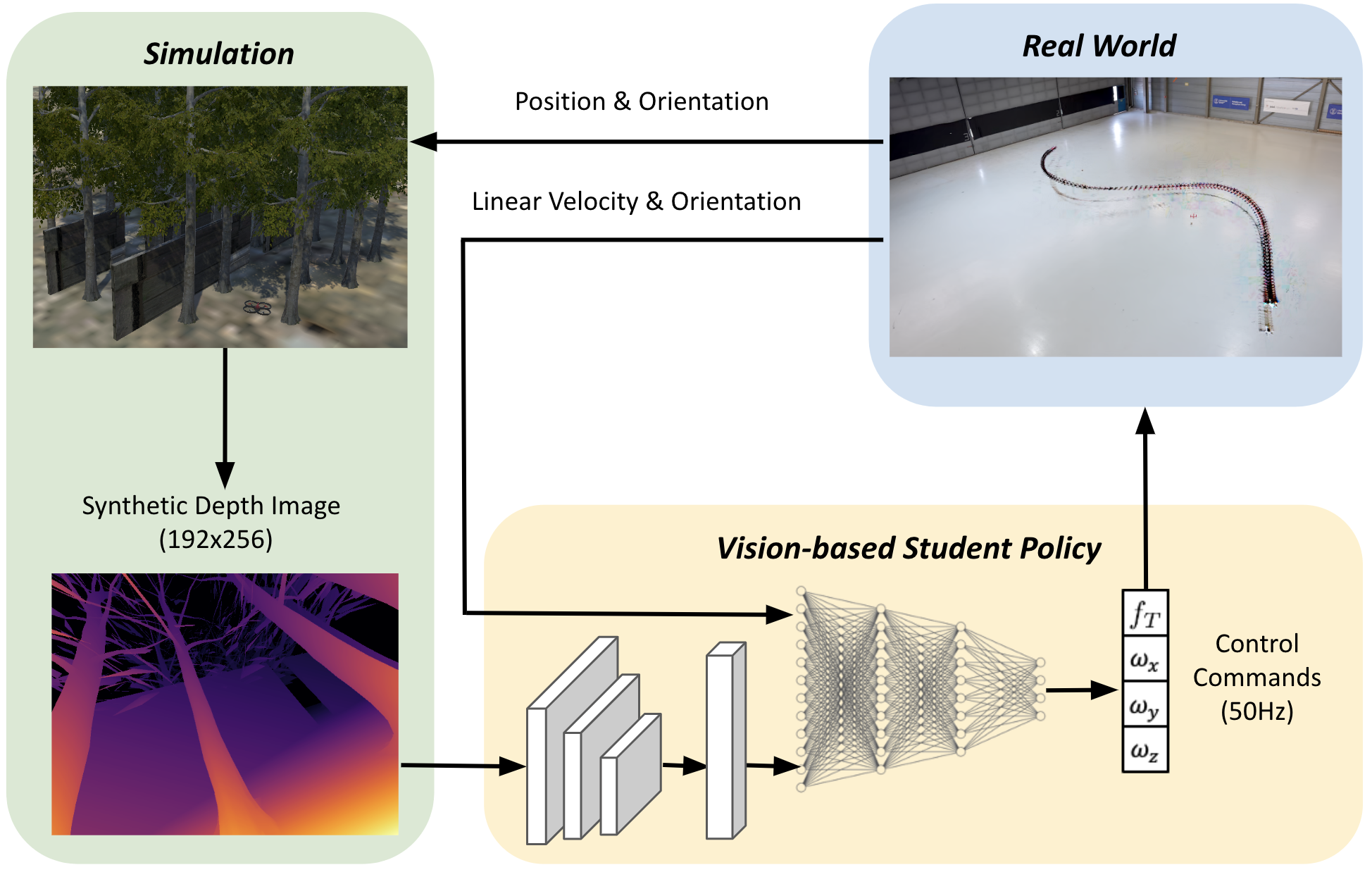

Deployment: We deploy the vision-based policy directly in the real world via hardware-in-the-loop (HITL) simulation: a physical quadrotor flies in a motion-capture system while observing virtual photorealistic environments that are updated in real time.
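The data flow of such a HITL loop can be sketched as follows. Every function here is a hypothetical placeholder, not part of the actual system: `read_mocap_pose` stands in for the motion-capture measurement, `render_virtual_view` for the photorealistic renderer (reduced to a single distance value), and `vision_policy` for the learned student policy. The sketch only illustrates that the pose comes from the real vehicle while the observation comes from a virtual scene.

```python
def read_mocap_pose(step):
    """Stand-in for a motion-capture measurement of the physical vehicle (hypothetical)."""
    return {"x": 0.1 * step, "y": 0.0, "z": 1.0}


def render_virtual_view(pose, obstacles_x):
    """Stand-in for the renderer: distance to the nearest virtual obstacle ahead of the pose."""
    ahead = [ox - pose["x"] for ox in obstacles_x if ox >= pose["x"]]
    return min(ahead) if ahead else float("inf")


def vision_policy(view):
    """Placeholder policy: command a lower speed when a virtual obstacle is close."""
    return 0.2 if view < 0.5 else 1.0


def hitl_loop(num_steps, obstacles_x):
    """Real pose in, virtual observation rendered, policy command out, once per step."""
    commands = []
    for step in range(num_steps):
        pose = read_mocap_pose(step)                   # measured on the real vehicle
        view = render_virtual_view(pose, obstacles_x)  # rendered from the virtual scene
        commands.append(vision_policy(view))           # would be sent to the real vehicle
    return commands


commands = hitl_loop(num_steps=10, obstacles_x=[0.65])
print(commands)
```

Because the obstacles exist only in the rendered scene, a policy failure in this setup does not result in a physical collision with them.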

Learning State-based Teacher Policy

Learning Vision-based Student Policy

Real-World Deployment

BibTeX

@misc{https://doi.org/10.48550/arxiv.2210.01841,
  doi = {10.48550/ARXIV.2210.01841},
  url = {https://arxiv.org/abs/2210.01841},
  author = {Song, Yunlong and Shi, Kexin and Penicka, Robert and Scaramuzza, Davide},
  keywords = {Robotics (cs.RO), Artificial Intelligence (cs.AI), FOS: Computer and information sciences},
  title = {Learning Perception-Aware Agile Flight in Cluttered Environments},
  publisher = {arXiv},
  year = {2022},
  copyright = {arXiv.org perpetual, non-exclusive license}
}